Life of a scan

Learn how OXO works under the hood.

OXO has been open-sourced for a while now, and this article goes into detail on how it works under the hood. We’ll follow the life of a scan and look at agents: what they are and what role they play when running a scan.

Creating a scan

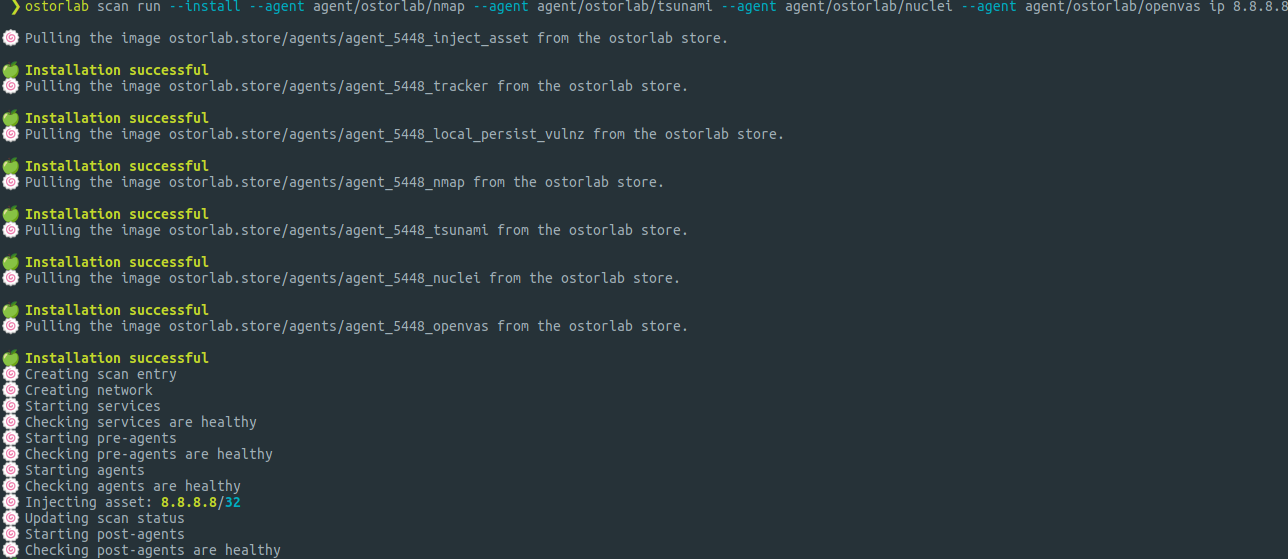

For the purposes of this article, we’re going to run a scan on the IP 8.8.8.8 using the Nmap, Tsunami, OpenVAS, and Nuclei agents, and follow its life until it completes. If this is your first time creating a scan, please refer to this article. To run this scan, use the following command:

```shell
oxo scan run --install --agent agent/ostorlab/nmap --agent agent/ostorlab/tsunami --agent agent/ostorlab/nuclei --agent agent/ostorlab/openvas ip 8.8.8.8
```

For a full breakdown of the above scan command, check out this tutorial.

First, let’s look at some important concepts before we get into how a scan is actually run from start to finish.

Agents & Agent Groups

OXO relies on agents to perform the actual scanning, and these agents are at the core of Ostorlab’s detection capabilities. Each agent has a YAML agent definition file. This file defines a set of attributes specific to the agent, such as the path to its Dockerfile (which can be anywhere in the repository), the arguments it accepts, and its in- and out-selectors. Selectors express the type of messages the agent expects or cares about. An agent listens to messages arriving through its in-selectors and can emit messages through its out-selectors.

Agents can perform a variety of tasks, such as security scanning, file analysis, and subdomain enumeration, and they can communicate with one another.

The schema of an agent definition file is specified in the agent_schema.json file, which contains a full description of the definition file. The required attributes of every definition file are name, kind (Agent or AgentGroup), in_selectors, and out_selectors.
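Here is a rough illustration of the required attributes listed above. The following Python sketch checks a definition that has already been parsed into a dictionary. It is not OXO’s validation logic, which relies on agent_schema.json. validate_definition and VALID_KINDS are hypothetical names, and the check only covers the required keys and the two kind values mentioned here.

```python
from typing import Any, Dict, List

# Required attributes of every agent definition file, as listed above.
REQUIRED_ATTRIBUTES = ("name", "kind", "in_selectors", "out_selectors")
# Hypothetical constant: the two kinds mentioned in the text.
VALID_KINDS = ("Agent", "AgentGroup")


def validate_definition(definition: Dict[str, Any]) -> List[str]:
    """Hypothetical helper: return a list of problems (empty if none are found)."""
    problems = [f"missing attribute: {key}" for key in REQUIRED_ATTRIBUTES if key not in definition]
    kind = definition.get("kind")
    if kind is not None and kind not in VALID_KINDS:
        problems.append(f"invalid kind: {kind!r}")
    return problems


nmap_definition = {
    "kind": "Agent",
    "name": "nmap",
    "in_selectors": ["v3.asset.ip.v4", "v3.asset.ip.v6"],
    "out_selectors": ["v3.report.vulnerability"],
}
print(validate_definition(nmap_definition))  # []
print(validate_definition({"kind": "Tool", "name": "x"}))
# ['missing attribute: in_selectors', 'missing attribute: out_selectors', "invalid kind: 'Tool'"]
```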

One challenge of packaging applications with Docker is the size of the resulting image. In the past, you’d need two Dockerfiles: one for development and one for production. To tackle this problem, Ostorlab proposes the builder pattern to optimize agent images. The pattern is implemented as a multi-stage build in a single Dockerfile: dependencies are compiled in a builder stage, and only the installed packages are copied into the final image. This keeps the resulting image smaller and eliminates the need to maintain two Dockerfiles.

The agent’s main file, in this case nmap_agent.py, is its entry point. The CMD instruction at the end of the Dockerfile runs it.

Example of a Dockerfile for the Nmap agent:

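```dockerfile
# Base image shared by the builder and final stages.
FROM python:3.8-alpine AS base

# Builder stage: compile the Python dependencies with the build toolchain.
FROM base AS builder
RUN apk add build-base
RUN mkdir /install
WORKDIR /install
COPY requirement.txt /requirement.txt
RUN pip install --prefix=/install -r /requirement.txt

# Final stage: Nmap plus the installed packages, without build-base.
FROM base
RUN apk update && apk add nmap && apk add nmap-scripts
COPY --from=builder /install /usr/local
RUN mkdir -p /app/agent
ENV PYTHONPATH=/app
COPY agent /app/agent
COPY oxo.yaml /app/agent/oxo.yaml
WORKDIR /app
# Entry point: the agent's main file.
CMD ["python3", "/app/agent/nmap_agent.py"]
```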

Structure of an agent

All agents should inherit from the Agent class to access different features, like automated message serialization, message receiving and sending, selector enrollment, agent health check, etc. It is worth noting that an agent can either be a message processor or standalone. Standalone agents can either be long-running or run-once.

An agent that processes messages must implement the process method. It must also declare the selectors it listens to in its YAML agent definition file.

Standalone agents don’t listen to any selectors and must implement their logic in the start method. Use cases include input injectors, such as asset or configuration injectors, as well as long-running processes, such as a proxy.
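The two kinds of agents can be contrasted with a small, self-contained stand-in for the base class. SketchAgent, AssetInjector, FakePortScanner and the emitted list are all hypothetical. They only mimic the split described above: a processor implements process, and a standalone agent implements start. A real agent inherits from OXO’s Agent class and receives messages through the message bus.

```python
from typing import Any, Dict, List, Tuple


class SketchAgent:
    """Hypothetical stand-in for the Agent base class; not the real OXO API."""

    def __init__(self) -> None:
        # Messages "emitted" on out-selectors, recorded as (selector, data) pairs.
        self.emitted: List[Tuple[str, Dict[str, Any]]] = []

    def emit(self, selector: str, data: Dict[str, Any]) -> None:
        self.emitted.append((selector, data))

    def process(self, selector: str, data: Dict[str, Any]) -> None:
        """Message processors override this to handle messages from their in-selectors."""
        raise NotImplementedError

    def start(self) -> None:
        """Standalone agents override this; message processors leave it empty."""


class AssetInjector(SketchAgent):
    """Standalone, run-once agent: no in-selectors, all of its logic lives in start()."""

    def __init__(self, host: str) -> None:
        super().__init__()
        self.host = host

    def start(self) -> None:
        self.emit("v3.asset.ip.v4", {"host": self.host, "version": 4})


class FakePortScanner(SketchAgent):
    """Message processor: handles each incoming asset message in process()."""

    def process(self, selector: str, data: Dict[str, Any]) -> None:
        # A real scanner would probe the host; this sketch pretends port 53/tcp is open.
        self.emit("v3.asset.ip.v4.port", {**data, "port": 53, "protocol": "tcp", "state": "open"})


injector = AssetInjector("8.8.8.8")
injector.start()
scanner = FakePortScanner()
for selector, data in injector.emitted:  # hand-wired here; the message bus does this in OXO
    scanner.process(selector, data)
print(scanner.emitted)
# [('v3.asset.ip.v4.port', {'host': '8.8.8.8', 'version': 4, 'port': 53, 'protocol': 'tcp', 'state': 'open'})]
```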

Agents can optionally define a custom health check method, is_healthy. It informs the runtime that the agent is operational and ensures that the scan asset is not injected before the agent has completed its setup.

An agent that intends to persist data must inherit from the AgentPersistMixin, which implements the logic to persist data. The mixin enables distributed storage for a group of agent replicas, or for a single agent that needs reliable storage.

Agents can also optionally inherit from the AgentReportVulnMixin, which implements the logic of fetching entries from the knowledge base and emitting vulnerability messages.

For an in-depth explanation of how agent implementation is done, refer to this tutorial.

Example of an agent definition file for the Nmap Agent. The attributes defined in this file are specific to each agent.

```yaml
kind: Agent
name: nmap
version: 0.4.0
image: images/logo.png
description: |
  Agent responsible for network discovery and security auditing using Nmap.
license: Apache-2.0
source: https://github.com/Ostorlab/agent_nmap
in_selectors:
  - v3.asset.ip.v4
  - v3.asset.ip.v6
  - v3.asset.domain_name
  - v3.asset.link
out_selectors:
  - v3.asset.ip.v4.port.service
  - v3.asset.ip.v6.port.service
  - v3.asset.domain_name.service
  - v3.report.vulnerability
docker_file_path: Dockerfile
docker_build_root: .
args:
  - name: "ports"
    type: "string"
    description: "List of ports to scan."
    value: "0-65535"
  - name: "timing_template"
    type: "string"
    description: "Template of timing settings (T0, T1, ... T5)."
    value: "T4"
```

- kind: Either Agent for a single agent, or AgentGroup if we wish to support multiple agents.

- name: The name of the agent. It should be lowercase and contain only alphanumeric characters.

- version: The agent version, which should follow the semantic versioning convention.

- image: The path to the agent’s image or logo.

- description: A description of the agent.

- license: The license of the agent.

- source: The source repository of the agent.

- in_selectors: The selectors through which the agent listens to incoming messages.

- out_selectors: The selectors through which the agent emits messages.

- args: Arguments we want to pass to the agent. In this case, these are ports (the list of ports to scan) and timing_template (the Nmap timing template).

- docker_file_path: The path to the Dockerfile that assembles the agent’s Docker image.

- docker_build_root: The Docker build directory for automated release builds.

Supported agent mixins

AgentHealthcheckMixin: The agent health check mixin ensures that agents are operational. It runs a web service (listening on 0.0.0.0:5000 by default) that must return a 200 status with OK as the response. The mixin supports adding multiple health check callbacks, and all of them must return True. The status is checked using the endpoint http://0.0.0.0:5000/status. This endpoint returns OK if all checks pass, and NOK if one of them fails.

A check might also fail in other ways, for instance when a callback raises an exception. The web service intentionally does not catch such exceptions, which makes failures easier to detect and debug. A health check should therefore test for the presence of OK rather than the absence of NOK.

Typical usage:

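```python
from ostorlab.agent.mixins import agent_healthcheck_mixin

# Inside an agent method: serve the /status endpoint and report healthy
# only when self._is_healthy() returns True.
status_agent = agent_healthcheck_mixin.AgentHealthcheckMixin()
status_agent.add_healthcheck(self._is_healthy)
status_agent.start()
```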
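The health check contract can be made concrete with a minimal standard-library sketch of such a service. On GET /status, it runs every registered callback and answers OK only if all of them return True. This is not the mixin’s implementation. HealthcheckServer and fetch_status are hypothetical names, and this sketch returns a 200 status for both OK and NOK. For the demo, port 0 lets the OS pick a free port instead of the default 5000.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, List


class HealthcheckServer:
    """Hypothetical sketch of a /status endpoint backed by health check callbacks."""

    def __init__(self, host: str = "127.0.0.1", port: int = 0) -> None:
        checks: List[Callable[[], bool]] = []
        self._checks = checks

        class StatusHandler(BaseHTTPRequestHandler):
            def do_GET(self) -> None:
                if self.path != "/status":
                    self.send_error(404)
                    return
                # Callback exceptions are not caught: the request then fails without an OK body.
                body = b"OK" if all(check() for check in checks) else b"NOK"
                self.send_response(200)
                self.send_header("Content-Length", str(len(body)))
                self.end_headers()
                self.wfile.write(body)

            def log_message(self, format: str, *args) -> None:
                pass  # keep the demo output quiet

        # Port 0 asks the OS for a free port; the mixin described above defaults to 5000.
        self._server = HTTPServer((host, port), StatusHandler)

    @property
    def url(self) -> str:
        host, port = self._server.server_address[:2]
        return f"http://{host}:{port}/status"

    def add_healthcheck(self, check: Callable[[], bool]) -> None:
        self._checks.append(check)

    def start(self) -> None:
        threading.Thread(target=self._server.serve_forever, daemon=True).start()

    def stop(self) -> None:
        self._server.shutdown()
        self._server.server_close()


def fetch_status(url: str) -> str:
    with urllib.request.urlopen(url, timeout=5) as response:
        return response.read().decode()


server = HealthcheckServer()
server.add_healthcheck(lambda: True)
server.start()
print(fetch_status(server.url))  # OK
server.add_healthcheck(lambda: False)
print(fetch_status(server.url))  # NOK
server.stop()
```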

AgentReportVulnMixin: This mixin implements the logic of fetching entries from the knowledge base and emitting vulnerability messages.

AgentPersistMixin: This mixin implements the logic to persist data. It enables distributed storage for a group of agent replicas, or for a single agent that needs reliable storage. A typical use case is ensuring that a message value is processed only once when multiple other agents produce duplicate or similar values.

Typical usage:

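```python
from ostorlab.agent.mixins import agent_persist_mixin

# agent_settings are the running agent's settings; dna identifies the asset to deduplicate.
persist_agent = agent_persist_mixin.AgentPersistMixin(agent_settings)
# Check membership before adding: checking after set_add would always report the value as seen.
is_new = not persist_agent.set_is_member('crawler_agent_asset_dna', dna)
if is_new:
    persist_agent.set_add('crawler_agent_asset_dna', dna)
```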
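When several replicas deduplicate concurrently, the membership test and the insertion must happen as one atomic step. Otherwise, two replicas can both treat the same value as new. The sketch below illustrates this with a hypothetical in-memory stand-in for the shared set, in which a lock makes "add if new" atomic. On a shared store the same role would be played by an atomic set-add; Redis SADD, for example, reports how many members it actually added. InMemorySetStore and add_if_new are not part of the mixin.

```python
import threading
from typing import Dict, List, Set


class InMemorySetStore:
    """Hypothetical single-process stand-in for a shared, persistent set store."""

    def __init__(self) -> None:
        self._sets: Dict[str, Set[str]] = {}
        self._lock = threading.Lock()

    def add_if_new(self, key: str, value: str) -> bool:
        """Atomically add value to the set at key; return True only if it was absent."""
        with self._lock:
            members = self._sets.setdefault(key, set())
            if value in members:
                return False
            members.add(value)
            return True


store = InMemorySetStore()
processed: List[str] = []
for dna in ["8.8.8.8", "8.8.4.4", "8.8.8.8"]:  # the last value is a duplicate from another agent
    if store.add_if_new("crawler_agent_asset_dna", dna):
        processed.append(dna)
print(processed)  # ['8.8.8.8', '8.8.4.4']
```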

Messages

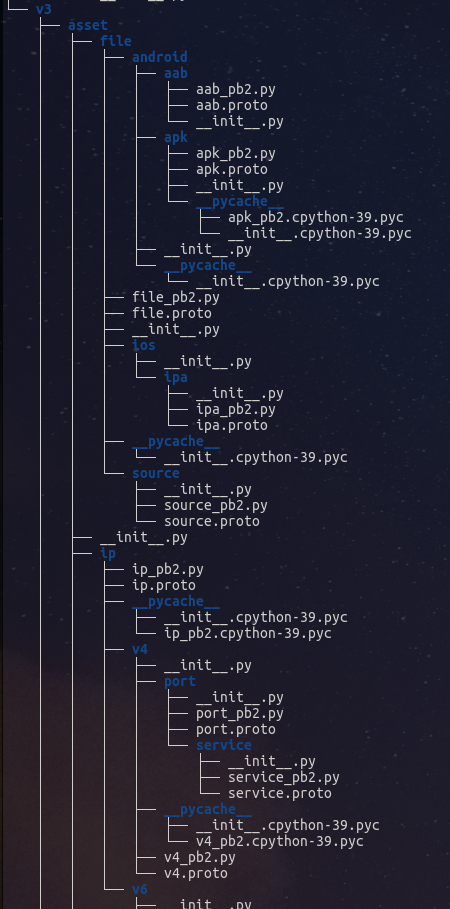

All agents communicate via messages. A message can contain information such as an IP address, a file blob, or a list of subdomains. Messages are automatically serialized based on their selector, which makes them easy to transmit. The format follows these rules:

- Messages are serialized using the protobuf format, a data exchange format for serializing structured data. Protobuf was chosen because it is a compact binary serialization format that supports bytes fields without an intermediary (and costly) encoding.

- Messages follow a hierarchy in which the message of a child selector must contain all the fields of its parent selector’s message. This applies to both incoming and outgoing messages. For instance, suppose the message of selector /foo/bar has the definition:

  ```
  {
      color: str
      size: int
  }
  ```

  Then the message with selector /foo/bar/baz must have the fields color and size, for instance:

  ```
  {
      color: str
      size: int
      weight: int
  }
  ```
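The hierarchy rule can be mirrored with Python dataclass inheritance. When the message type of a child selector extends its parent’s, code written against the parent’s fields can read any descendant message. The class and function names are illustrative only, since OXO defines message formats with protobuf rather than dataclasses.

```python
from dataclasses import dataclass, fields
from typing import Set


@dataclass
class FooBar:
    """Message for the parent selector /foo/bar."""
    color: str
    size: int


@dataclass
class FooBarBaz(FooBar):
    """Message for the child selector /foo/bar/baz: inherits every parent field."""
    weight: int


def field_names(message_type: type) -> Set[str]:
    return {f.name for f in fields(message_type)}


def describe(message: FooBar) -> str:
    """Relies only on the parent's fields, so it accepts any descendant message."""
    return f"{message.color}/{message.size}"


child = FooBarBaz(color="red", size=3, weight=7)
print(field_names(FooBar) <= field_names(FooBarBaz))  # True
print(describe(child))  # red/3
```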

The fields expected in a particular message, their types and whether these fields are required or not, are specified using protobuf. A schema for a particular use of protocol buffers associates data types with field names, using integers to identify each field.

Example of a protobuf file for Nmap’s v3.asset.ip.v4.port out-selector

```protobuf
syntax = "proto2";

package v3.asset.ip.v4.port;

message Message {
    optional string host = 1;
    optional string mask = 2;
    optional int32 version = 3 [default = 4];
    optional uint32 port = 5;
    optional string protocol = 6;
    optional string state = 7;
}
```

All protos are defined under ostorlab/agent/message/proto/v3. Each folder in v3 is named after a selector component. For example, the asset folder contains the protos for all selectors that start with v3.asset.
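Because the folders follow the selector, the directory holding a selector’s proto can be derived from the selector itself. This sketch assumes that each dot-separated component of the selector maps to one nested folder under the root named above. It does not try to guess the file name inside that folder, and proto_directory is a hypothetical helper.

```python
from pathlib import PurePosixPath

# Root of the proto definitions, as given in the text above.
PROTO_ROOT = PurePosixPath("ostorlab/agent/message/proto")


def proto_directory(selector: str) -> PurePosixPath:
    """Map a versioned selector such as 'v3.asset.ip.v4.port' to its folder (assumed layout)."""
    parts = selector.split(".")
    version = parts[0]
    if not version.startswith("v") or not version[1:].isdigit():
        raise ValueError(f"selector must start with a version such as v3, got {selector!r}")
    return PROTO_ROOT.joinpath(*parts)


print(proto_directory("v3.asset.ip.v4.port"))
# ostorlab/agent/message/proto/v3/asset/ip/v4/port
```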

Structure of the Message data class

The message data class is in charge of serializing and deserializing data based on the source or destination selector. It has two methods, from_data and from_raw. These methods provide a convenient way to avoid handling protobuf messages and the less friendly protobuf API directly.

- from_data: Generates a message from structured data and a destination selector (the target selector used to define the message format). It returns a message with both raw and data representations. The selector passed to this method must specify the version in use.

- from_raw: Generates a message from raw data (bytes to be deserialized into a Pythonic data structure) and a source selector. It returns a message with both raw and data representations. The selector must specify the version in use.
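The shape of these two methods can be sketched with a small stand-in that uses JSON instead of protobuf, so that it runs with the standard library alone. SketchMessage is hypothetical, as are its argument order and its version check. It only mirrors the idea above: from_data goes from structured data to raw bytes, from_raw goes the other way, and both keep the selector, raw and data forms together.

```python
import json
import re
from dataclasses import dataclass
from typing import Any, Dict

# Selectors must carry their version, e.g. "v3.asset.ip.v4".
_VERSIONED_SELECTOR = re.compile(r"^v\d+(\.[\w-]+)+$")


@dataclass
class SketchMessage:
    """Hypothetical stand-in for the message data class (JSON instead of protobuf)."""

    selector: str
    data: Dict[str, Any]
    raw: bytes

    @staticmethod
    def _check(selector: str) -> None:
        if not _VERSIONED_SELECTOR.match(selector):
            raise ValueError(f"selector must specify its version: {selector!r}")

    @classmethod
    def from_data(cls, selector: str, data: Dict[str, Any]) -> "SketchMessage":
        """Serialize structured data for a destination selector."""
        cls._check(selector)
        raw = json.dumps(data, sort_keys=True).encode()
        return cls(selector=selector, data=data, raw=raw)

    @classmethod
    def from_raw(cls, selector: str, raw: bytes) -> "SketchMessage":
        """Deserialize raw bytes received on a source selector."""
        cls._check(selector)
        return cls(selector=selector, data=json.loads(raw), raw=raw)


sent = SketchMessage.from_data("v3.asset.ip.v4", {"host": "8.8.8.8", "version": 4})
received = SketchMessage.from_raw("v3.asset.ip.v4", sent.raw)
print(received.data == sent.data)  # True
```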

Scalability

Agents support running multiple replicas and scaling them dynamically. The agent SDK provides the tools to implement multi-instance functionality, such as distributed locking, distributed storage, and message load balancing.

Agents can be scaled dynamically. To do so, look up the agent’s Docker service name (for example, with docker service ls) and run:

```shell
docker service scale <service_name>=<replicas_count>
```

It is also possible to set an agent’s initial replica count directly in the agent group definition YAML file used to run a scan.
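Message load balancing between replicas typically follows the competing-consumers pattern. All replicas of an agent read from one shared queue, and each message goes to whichever replica takes it first. The sketch below simulates this, with Python’s queue.Queue standing in for RabbitMQ and threads standing in for agent replicas. The run_replicas helper is illustrative, and so is the assumption that replicas share a single queue; neither is taken from the SDK.

```python
import queue
import threading
from typing import List, Optional, Tuple


def run_replicas(messages: List[str], replicas: int) -> List[Tuple[int, str]]:
    """Hypothetical sketch: `replicas` workers compete for messages on one shared queue."""
    shared: "queue.Queue[Optional[str]]" = queue.Queue()
    handled: List[Tuple[int, str]] = []
    handled_lock = threading.Lock()

    def replica(replica_id: int) -> None:
        while True:
            message = shared.get()
            if message is None:  # stop marker: exactly one is queued per replica
                return
            with handled_lock:
                handled.append((replica_id, message))

    workers = [threading.Thread(target=replica, args=(i,)) for i in range(replicas)]
    for worker in workers:
        worker.start()
    for message in messages:
        shared.put(message)
    for _ in workers:
        shared.put(None)  # queued after all messages, so every message is consumed first
    for worker in workers:
        worker.join()
    return handled


messages = [f"asset-{i}" for i in range(20)]
handled = run_replicas(messages, replicas=3)
# Each message is handled exactly once, by whichever replica took it first.
print(sorted(m for _, m in handled) == sorted(messages))  # True
```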

The Local Runtime

Ostorlab supports multiple runtimes and ships with a local runtime. The local runtime runs on Docker Swarm, which was chosen for its ease of use and light footprint. Because multiple runtimes are supported, other runtimes can be added, for instance one that runs the scan agents on top of Kubernetes. The runtime starts a vanilla RabbitMQ service and a vanilla Redis service. It then starts all the agents listed in the AgentRunDefinition, ensures that they’re healthy, and injects the target asset.

1. Creating the Database

Before starting the Docker Swarm services, the runtime performs some checks. It verifies that Docker is installed and working, and that the user has permission to run it.

If all checks pass, the runtime instantiates a SQLite database, creates the necessary tables, and then creates the scan instance in the database. The database is used to store the scan results locally. It is located in the .ostorlab private directory and stored as a db.sqlite file.
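The database step can be sketched with Python’s sqlite3 module. The table layout below is a hypothetical, simplified schema for illustration only. The runtime’s actual tables are not described in this article, and NOT_STARTED is an assumed initial progress value. An in-memory database replaces the db.sqlite file so the example leaves nothing on disk.

```python
import sqlite3

# Hypothetical, simplified schema: one row per scan, one row per reported vulnerability.
SCHEMA = """
CREATE TABLE IF NOT EXISTS scan (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT,
    asset TEXT NOT NULL,
    progress TEXT NOT NULL DEFAULT 'NOT_STARTED'
);
CREATE TABLE IF NOT EXISTS vulnerability (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    scan_id INTEGER NOT NULL REFERENCES scan(id),
    title TEXT NOT NULL,
    risk_rating TEXT NOT NULL,
    technical_detail TEXT
);
"""


def create_scan(connection: sqlite3.Connection, asset: str, title: str = "") -> int:
    """Create the tables if needed, insert a scan row and return its id."""
    connection.executescript(SCHEMA)
    cursor = connection.execute("INSERT INTO scan (title, asset) VALUES (?, ?)", (title, asset))
    connection.commit()
    return cursor.lastrowid


connection = sqlite3.connect(":memory:")  # the runtime uses a db.sqlite file instead
scan_id = create_scan(connection, asset="8.8.8.8")
print(connection.execute("SELECT id, asset, progress FROM scan").fetchall())
# [(1, '8.8.8.8', 'NOT_STARTED')]
```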

2. Creating the services

The runtime creates a Docker Swarm network where all services and agents can communicate. It then spawns a local RabbitMQ service that serves as the message bus, and a local Redis service for temporary scan data storage and distributed locking. Before going any further, the runtime checks that the RabbitMQ and Redis services are running and healthy. If either service is not healthy, an UnhealthyService exception is raised.
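Checking that a freshly started service is healthy usually means polling it for a bounded time rather than checking only once. This minimal sketch assumes one callable health probe per service and a fixed retry budget. wait_until_healthy, its parameters and the probes are hypothetical; only the UnhealthyService exception name comes from the text above. The sleep function is injectable so the demo runs instantly.

```python
import time
from typing import Callable, Dict


class UnhealthyService(Exception):
    """Raised when a service does not become healthy in time."""


def wait_until_healthy(
    probes: Dict[str, Callable[[], bool]],
    retries: int = 5,
    delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Poll each probe until it returns True, or raise UnhealthyService."""
    for name, probe in probes.items():
        for attempt in range(retries):
            if probe():
                break
            if attempt < retries - 1:
                sleep(delay)
        else:  # no break: every attempt failed
            raise UnhealthyService(f"{name} is not healthy after {retries} attempts")


attempts = {"redis": 0}


def redis_probe() -> bool:
    attempts["redis"] += 1
    return attempts["redis"] >= 3  # becomes healthy on the third poll


wait_until_healthy({"rabbitmq": lambda: True, "redis": redis_probe}, sleep=lambda seconds: None)
print(attempts["redis"])  # 3
```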

3. Starting the pre-agents

Pre-agents are agents that must exist before other agents. This applies to all persistence agents, which must already be running when the other agents start sending data.

Assuming the services are healthy, the runtime starts the agents in three phases. Some agents, like the persistence agents described above, must run before all other agents start. Others must start only after all other agents are up and working, like the tracker that keeps track of the scan progress. The runtime then verifies that the pre-agents are healthy and raises an AgentNotHealthy exception if they are not.

4. Starting the agents

After verifying that the pre-agents are healthy, the runtime starts the agents listed in the agent group definition. An agent group definition is a set of agents and their configurations. For each agent in it, a series of steps happens. First, extra Docker configs and mounts can optionally be passed. Next, the runtime checks whether the agent is installed, that is, whether its image is available locally. It then instantiates a runtime agent, which consolidates the agent settings with the agent’s default definition, and creates the agent service. If the --follow flag was passed when creating the scan, the runtime starts streaming the agent service’s logs. If any of the agents is not healthy, an exception is raised.
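Consolidating settings essentially means starting from the defaults declared in the agent definition and letting the values supplied for the scan override them. The minimal sketch below performs that merge for the args list of the Nmap definition shown earlier. consolidate_args is hypothetical: it ignores type conversion, and rejecting unknown argument names is a choice made here rather than documented behavior.

```python
from typing import Any, Dict, List

# Default args as declared in the Nmap agent definition shown earlier.
DEFAULT_ARGS: List[Dict[str, Any]] = [
    {"name": "ports", "type": "string", "value": "0-65535"},
    {"name": "timing_template", "type": "string", "value": "T4"},
]


def consolidate_args(defaults: List[Dict[str, Any]], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return name -> value, where scan-provided overrides win over definition defaults."""
    unknown = set(overrides) - {arg["name"] for arg in defaults}
    if unknown:
        raise KeyError(f"unknown args: {sorted(unknown)}")
    return {arg["name"]: overrides.get(arg["name"], arg["value"]) for arg in defaults}


print(consolidate_args(DEFAULT_ARGS, {"ports": "22,80,443"}))
# {'ports': '22,80,443', 'timing_template': 'T4'}
```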

5. Injecting the assets

To inject the asset, the runtime first creates the agent settings for the agent/ostorlab/inject_asset agent. It passes them to the AgentRuntime class, together with the Docker client, the MQ service, and the Redis service. The AgentRuntime class consolidates the agent settings and the agent’s default settings, and returns a runtime agent. The runtime calls create_agent_service on it, which creates a Docker agent service with the proper configs and policies. The scan progress is then set to IN_PROGRESS.
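For the IP target used in this article, the injected asset boils down to a message on an IP selector. The following sketch shows how a target string could be turned into a selector and payload with the standard ipaddress module. The helper name is hypothetical, and the host and version field names are assumptions borrowed from the port proto shown earlier; this is not the inject_asset agent’s actual code. The resulting selectors match the first two in_selectors of the Nmap definition.

```python
import ipaddress
from typing import Any, Dict, Tuple


def ip_asset_message(target: str) -> Tuple[str, Dict[str, Any]]:
    """Hypothetical helper: pick the v4 or v6 IP asset selector for a target address."""
    address = ipaddress.ip_address(target)  # raises ValueError for an invalid address
    selector = f"v3.asset.ip.v{address.version}"
    return selector, {"host": str(address), "version": address.version}


print(ip_asset_message("8.8.8.8"))
# ('v3.asset.ip.v4', {'host': '8.8.8.8', 'version': 4})
```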

6. Starting the post agents

OK! There is just one more step before we can be sure that the scan has been created successfully: starting the post-agents, which must start after all other agents. This applies to the tracker agent, which needs to monitor the other agents and handle the scan lifecycle. The runtime then checks that the post-agents are healthy. If they are, the scan has been created successfully.

Any exception that is thrown during the steps explained above will cause the scan to be stopped.
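The ordering of the phases and the stop-on-failure rule can be summarized in a short sketch. Every function and phase name here is hypothetical, and each phase is a stub. Only the order of the steps and the rule that any exception stops the scan come from the description above.

```python
from typing import Callable, List, Tuple

Phase = Tuple[str, Callable[[], None]]


def run_scan(phases: List[Phase], stop_scan: Callable[[], None]) -> List[str]:
    """Run the phases in order; if any phase raises, stop the scan and re-raise."""
    completed: List[str] = []
    try:
        for name, phase in phases:
            phase()
            completed.append(name)
    except Exception:
        stop_scan()
        raise
    return completed


def noop() -> None:
    """Stub standing in for a real phase."""


# Hypothetical names for the steps described above, in order.
PHASE_NAMES = [
    "check_docker_and_create_database",
    "create_services",
    "start_pre_agents",
    "start_agents",
    "inject_asset",
    "start_post_agents",
]

print(run_scan([(name, noop) for name in PHASE_NAMES], stop_scan=noop))
```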

7. Processing the messages

When an agent’s run method is called, the agent starts its health check and starts listening for new messages. Each agent publishes messages to and consumes messages from the message queue, and it only processes messages on the selectors it listens to. Similarly, once an agent is done processing a message, it can emit messages that are delivered to all agents listening on the corresponding selector. Let’s take a look at how the agents publish to and consume from the queue.
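Conceptually, this routing resembles a tiny in-process bus with one queue per agent; the steps that follow then show the real queues in RabbitMQ. Everything in this sketch is hypothetical, including SketchBus, its methods, and the port_scanner and service_checker agents. Selectors are matched exactly, which deliberately leaves out how the real broker handles parent and child selectors.

```python
from collections import defaultdict, deque
from typing import Any, Callable, Deque, Dict, List, Tuple

Message = Tuple[str, Dict[str, Any]]
Handler = Callable[[str, Dict[str, Any]], None]


class SketchBus:
    """Hypothetical in-process stand-in for the message queue: one queue per agent."""

    def __init__(self) -> None:
        self._agents: Dict[str, Tuple[List[str], Handler]] = {}
        self._queues: Dict[str, Deque[Message]] = defaultdict(deque)

    def subscribe(self, agent: str, in_selectors: List[str], handler: Handler) -> None:
        self._agents[agent] = (in_selectors, handler)

    def emit(self, selector: str, data: Dict[str, Any]) -> None:
        # Copy the message into the queue of every agent listening on this exact selector.
        for agent, (in_selectors, _) in self._agents.items():
            if selector in in_selectors:
                self._queues[agent].append((selector, data))

    def drain(self) -> None:
        """Deliver queued messages until every agent queue is empty."""
        while any(self._queues.values()):
            for agent, (_, handler) in self._agents.items():
                pending = self._queues[agent]
                while pending:
                    handler(*pending.popleft())


bus = SketchBus()
findings: List[Dict[str, Any]] = []


def port_scanner(selector: str, data: Dict[str, Any]) -> None:
    # Pretend a DNS service was found on port 53 and emit it on an out-selector.
    bus.emit("v3.asset.ip.v4.port.service", {**data, "port": 53, "service": "domain"})


bus.subscribe("port_scanner", ["v3.asset.ip.v4"], port_scanner)
bus.subscribe("service_checker", ["v3.asset.ip.v4.port.service"], lambda s, d: findings.append(d))
bus.emit("v3.asset.ip.v4", {"host": "8.8.8.8", "version": 4})
bus.drain()
print(findings)
# [{'host': '8.8.8.8', 'version': 4, 'port': 53, 'service': 'domain'}]
```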

- Make sure to create a scan if you haven’t already done so:

  ```shell
  oxo scan run --install --agent agent/ostorlab/nmap --agent agent/ostorlab/tsunami --agent agent/ostorlab/nuclei --agent agent/ostorlab/openvas ip 8.8.8.8
  ```

- List all running containers:

  ```shell
  docker ps
  ```

- Connect to the RabbitMQ container, where <container_id> is the ID of the RabbitMQ container:

  ```shell
  docker exec -it <container_id> bash
  ```

- List the queues:

  ```shell
  rabbitmqadmin list queues name node messages messages_ready messages_unacknowledged message_stats.publish_details.rate message_stats.deliver_get_details.rate message_stats.publish
  ```

Example output of the above command:

| name | node | messages | messages_ready | messages_unacknowledged | message_stats.publish_details.rate | message_stats.deliver_get_details.rate | message_stats.publish |
|---|---|---|---|---|---|---|---|
| inject_asset_queue | rabbit@bb2486830037 | 0 | 0 | 0 | | | |
| local_persist_vulnz_queue | rabbit@bb2486830037 | 0 | 0 | 0 | 0.0 | 0.0 | 1 |
| nmap_queue | rabbit@bb2486830037 | 1 | 0 | 1 | 0.0 | 0.0 | 1 |
| nuclei_queue | rabbit@bb2486830037 | 0 | 0 | 0 | 0.0 | 0.0 | 1 |
| openvas_queue | rabbit@bb2486830037 | 1 | 1 | 0 | 0.0 | 1 | |
| tracker_queue | rabbit@bb2486830037 | 0 | 0 | 0 | | | |
| tsunami_queue | rabbit@bb2486830037 | 1 | 0 | 1 | 0.0 | 0.0 | 1 |
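Besides rabbitmqadmin, the same counters can be read over HTTP from the RabbitMQ management API, whose GET /api/queues endpoint returns one JSON object per queue. This sketch assumes the management API is reachable from where the code runs (15672 is the plugin’s default port) and that you have valid credentials. The article does not say whether the local runtime exposes this API. fetch_queues and summarize_queues are hypothetical helpers, split so that the summary can be tried on sample data taken from the table above.

```python
import base64
import json
import urllib.request
from typing import Any, Dict, List


def fetch_queues(base_url: str, user: str, password: str) -> List[Dict[str, Any]]:
    """GET <base_url>/api/queues from the RabbitMQ management plugin (basic auth)."""
    request = urllib.request.Request(f"{base_url.rstrip('/')}/api/queues")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def summarize_queues(queues: List[Dict[str, Any]]) -> Dict[str, Dict[str, int]]:
    """Keep the backlog counters per queue; missing counters default to 0."""
    keys = ("messages", "messages_ready", "messages_unacknowledged")
    return {q["name"]: {k: q.get(k, 0) for k in keys} for q in queues}


sample = [{"name": "nmap_queue", "messages": 1, "messages_ready": 0, "messages_unacknowledged": 1}]
print(summarize_queues(sample))
# {'nmap_queue': {'messages': 1, 'messages_ready': 0, 'messages_unacknowledged': 1}}
```

Against a live broker, the call would look like summarize_queues(fetch_queues("http://localhost:15672", user, password)).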

Reporting of vulnerabilities

If an agent needs to report a vulnerability, it can pass an entry from Ostorlab’s knowledge base to the report_vulnerability method. This method fetches the entry’s details from the knowledge base and emits a vulnerability message.

Using the embedded knowledge base is optional; the API also supports persisting arbitrary vulnerability entries.

Example of Nmap reporting a vulnerability:

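```python
from ostorlab.agent.kb import kb
from ostorlab.agent.mixins import agent_report_vulnerability_mixin as vuln_mixin

# Inside the Nmap agent: process_scans, scan_results and normal_results come from the agent's own code.
scan_result_technical_detail = process_scans.get_technical_details(scan_results)
technical_detail = scan_result_technical_detail
if normal_results is not None:
    # Append the raw Nmap XML output as a fenced xml block in the report.
    technical_detail = f'{scan_result_technical_detail}\n```xml\n{normal_results}\n```'

self.report_vulnerability(entry=kb.KB.NETWORK_PORT_SCAN,
                          technical_detail=technical_detail,
                          risk_rating=vuln_mixin.RiskRating.INFO)
```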

The reported vulnerabilities can be accessed using the following command:

```shell
oxo vulnz describe -s [scan-id]
```

This article was a deep dive into how Ostorlab works behind the scenes. As the platform continues to evolve, the concepts discussed here may change to address more use cases and future needs.